Artificial Intelligence (AI) is no longer science fiction. It is here now, transforming industries, automating processes, and improving decision-making. Yet as AI advances, so do the ethical, security, and social issues it raises. Proactive AI development is about anticipating those issues and designing AI systems that are not only capable but also responsible, fair, and respectful of human values.

Proactive AI Development: Shaping the Future Responsibly

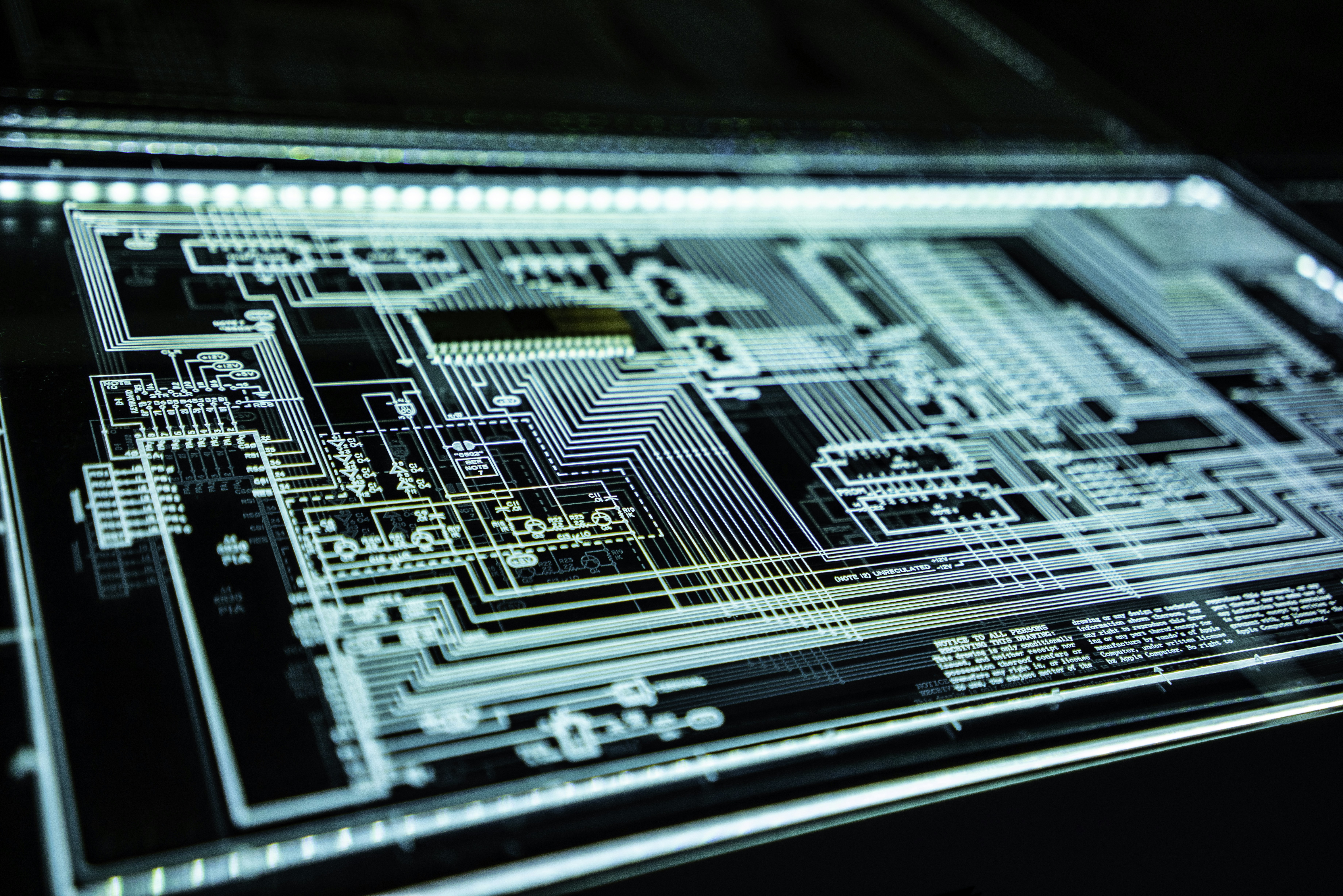

What is Proactive AI Development?

Image Credit: Unsplash

Proactive AI development means designing, testing, and deploying AI systems with foresight, addressing potential risks and ethical issues before they become significant problems. In contrast to reactive approaches (fixing issues after they occur), proactive AI development focuses on:

- Ethical AI – Ensuring fairness, transparency, and accountability.

- Bias Mitigation – Preventing discriminatory outcomes from AI models.

- Security & Robustness – Protecting against adversarial attacks and misuse.

- Human-Centric Design – Aligning AI with societal values and needs.

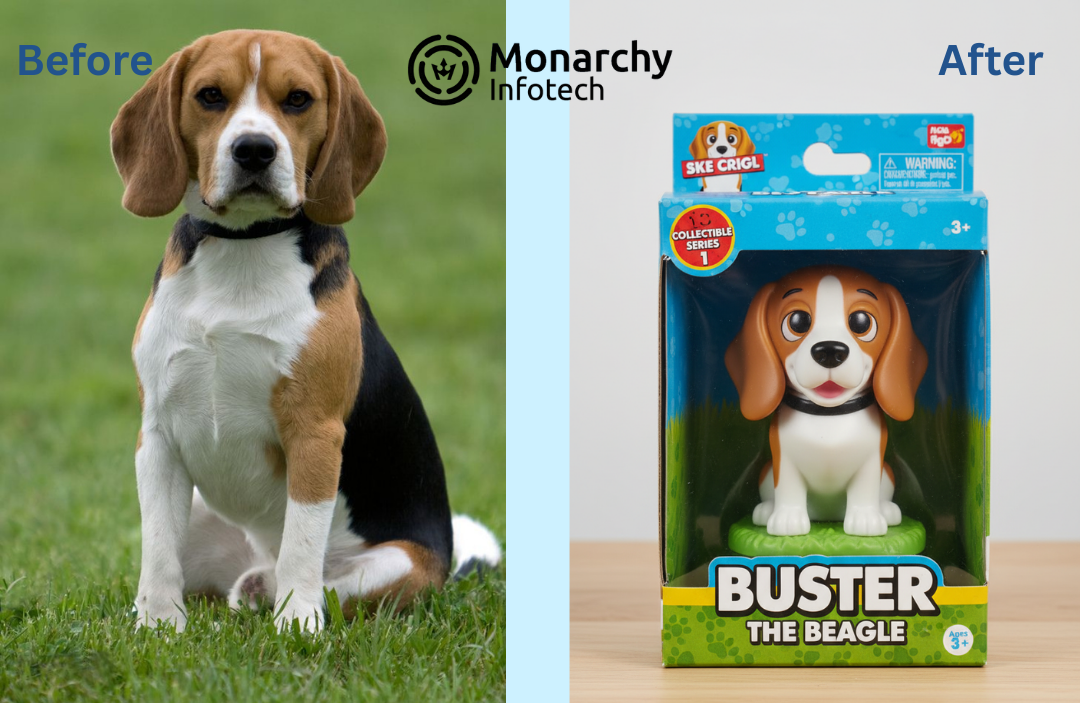

Why Proactive AI Development Matters

Image Credit: Unsplash

1. Avoiding Harm Before It Occurs

AI systems can unintentionally perpetuate bias, invade privacy, or make harmful decisions. Preventive measures, such as bias audits and ethical guidelines, help stop these harms before they happen.

2. Building Trust in AI

Public confidence in AI is fragile. By addressing risks early, businesses build trust with the public, regulators, and other stakeholders.

3. Compliance with Laws

Governments around the globe are introducing AI regulations (e.g., the EU AI Act and the U.S. Executive Order on AI). Building compliance into development from the start helps organizations meet these requirements and avoid legal pitfalls.

4. Long-Term Sustainability

AI developed responsibly today tends to be more resilient and sustainable over the long term, reducing costly fixes and reputational damage.

Key Strategies for Proactive AI Development

1. Ethical AI Frameworks

Adopt principles such as:

- Fairness – Prevent AI from discriminating on the basis of race, gender, or other protected attributes.

- Transparency – Make AI decisions understandable, for example through explainable AI (XAI) techniques.

- Accountability – Assign clear responsibility for AI outcomes.
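Transparency is easiest to illustrate with a linear scoring model, whose output decomposes exactly into one contribution (weight × feature value) per input. The sketch below assumes such a model; the function name, feature names, and weights are hypothetical, and more complex models generally need dedicated XAI methods such as SHAP or LIME instead.

```python
from typing import Dict, List, Tuple


def explain_linear_score(
    weights: Dict[str, float],
    bias: float,
    features: Dict[str, float],
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the score and each feature's contribution, largest magnitude first."""
    contributions = [(name, weight * features[name]) for name, weight in weights.items()]
    score = bias + sum(value for _, value in contributions)
    # Sorting by absolute size puts the most influential features first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


# Hypothetical credit-scoring weights and one applicant's (scaled) features.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.3}
applicant = {"income": 4.0, "debt_ratio": 0.6, "years_employed": 5.0}
score, reasons = explain_linear_score(weights, bias=-1.0, features=applicant)
for name, value in reasons:
    print(f"{name}: {value:+.2f}")
```

Each printed line is a human-readable reason for the decision, which is the kind of explanation an accountability review can inspect.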

2. Bias Detection & Mitigation

Train models on diverse, representative datasets.

Perform periodic bias audits using tools such as IBM's AI Fairness 360 or Google's What-If Tool.
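A bias audit often starts with simple group metrics. The sketch below computes two that fairness toolkits commonly report: the demographic parity difference (the gap in positive-prediction rates between groups) and the disparate impact ratio (lowest rate divided by highest). It is a hand-rolled illustration, not the API of AI Fairness 360 or the What-If Tool; the function names and data are hypothetical, and the 0.8 threshold assumes the "four-fifths rule" heuristic.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(y_pred: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def bias_audit(y_pred: Sequence[int], groups: Sequence[str], threshold: float = 0.8) -> dict:
    """Summarize group selection rates and flag a low disparate impact ratio."""
    rates = selection_rates(y_pred, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else float("nan")
    return {
        "selection_rates": rates,
        "demographic_parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        # NaN compares False, so a model that never predicts 1 is not flagged here.
        "flagged": ratio < threshold,
    }


# Hypothetical predictions for two groups of four applicants each.
report = bias_audit(
    y_pred=[1, 1, 0, 1, 1, 0, 0, 0],
    groups=["A", "A", "A", "A", "B", "B", "B", "B"],
)
print(report)
```

Running such a check on every retrained model, rather than once, is what makes the audit periodic.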

3. Robust Security Measures

Shield AI models from adversarial attacks (e.g., data poisoning).
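One basic defense against crude data poisoning is to drop training values that are extreme relative to the rest of the data. The sketch below uses the median and median absolute deviation (MAD), which, unlike the mean and standard deviation, are not dragged around by the injected points themselves; the 0.6745 constant and 3.5 cutoff follow a commonly used modified z-score convention. The helper name and data are hypothetical, and subtle, targeted poisoning needs stronger defenses than this.

```python
import statistics
from typing import List, Sequence


def robust_keep_indices(values: Sequence[float], cutoff: float = 3.5) -> List[int]:
    """Indices of values whose modified z-score |0.6745 * (x - median) / MAD| <= cutoff."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # No spread to compare against; keep everything rather than guess.
        return list(range(len(values)))
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) <= cutoff
    ]


# Hypothetical feature column with one injected extreme value.
column = [1.0, 1.2, 0.9, 1.1, 1.0, 25.0]
kept = robust_keep_indices(column)
print(kept)  # [0, 1, 2, 3, 4]
```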

Apply differential privacy to protect user data.
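The simplest differential privacy tool is the Laplace mechanism: add noise scaled to how much one person can change a query's answer. The sketch below applies it to a counting query, whose sensitivity is 1, so the noise scale is 1/epsilon. The function names and data are hypothetical; Python's `random` is not cryptographically secure and naive floating-point noise has known weaknesses, so production systems should use a vetted library such as OpenDP or Google's differential-privacy library.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so Laplace noise with scale 1 / epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical user ages; a smaller epsilon means more noise and stronger privacy.
ages = [23, 35, 41, 29, 52, 38, 61, 27]
print(private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=random.Random(7)))
```

Each release is noisy, but the noise is unbiased, so aggregate statistics stay useful while any single person's presence is masked.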

4. Continuous Monitoring & Feedback Loops

Implement AI monitoring software to catch anomalies in real time.
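A minimal form of real-time monitoring is to compare each new metric reading (latency, error rate, prediction score) with a rolling window of recent readings. The sketch below flags readings more than three standard deviations from the window mean; the class name, window size, and threshold are hypothetical defaults, and dedicated monitoring platforms use richer drift and anomaly tests.

```python
import statistics
from collections import deque
from typing import Deque


class RollingAnomalyDetector:
    """Flags a metric value that sits far outside the recent window (z-score test)."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value: float) -> bool:
        """Score `value` against the window, then add it to the window."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std == 0:
                is_anomaly = value != mean
            else:
                is_anomaly = abs(value - mean) / std > self.threshold
        # Anomalies are kept in the window too, so a sustained shift eventually
        # becomes the new baseline instead of alerting forever.
        self.history.append(value)
        return is_anomaly


# Hypothetical per-minute latency readings (ms) with one spike at the end.
detector = RollingAnomalyDetector()
latencies = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 400]
flags = [detector.update(x) for x in latencies]
print(flags)  # ten False values, then True
```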

Collect user feedback and use it to improve AI behavior.

5. Collaboration & Multidisciplinary Teams

Include ethicists, sociologists, and lawyers in AI design.

Consult with policymakers and industry associations to develop best practices.

Real-World Examples of Proactive AI

1. Google's Responsible AI Practices

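Google researchers developed TCAV (Testing with Concept Activation Vectors), which measures how strongly human-understandable concepts influence a model's predictions. This helps interpret model decisions and surface bias in models such as image classifiers.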
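The core TCAV idea can be sketched in a few lines: find a direction in a layer's activation space that points toward a concept (the CAV), then count how often moving along that direction increases the class logit. This is a simplified illustration, not Google's implementation: TCAV trains a linear classifier to obtain the CAV, whereas this sketch swaps in a difference of means, and the two-dimensional activations and `tanh` logit are hypothetical stand-ins for a real network layer.

```python
import math
from typing import List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Vector]) -> Vector:
    """Component-wise mean of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def concept_activation_vector(concept_acts: Sequence[Vector], random_acts: Sequence[Vector]) -> Vector:
    """Unit vector pointing from random-example activations toward concept activations.

    Simplification: TCAV trains a linear classifier on the two activation sets and
    uses its normal vector; a difference of means keeps this sketch dependency-free.
    """
    diff = [c - r for c, r in zip(mean_vector(concept_acts), mean_vector(random_acts))]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0:
        raise ValueError("concept and random activations have identical means")
    return [d / norm for d in diff]


def toy_logit_gradient(acts: Vector, weights: Vector) -> Vector:
    """Gradient of a hypothetical class logit sum_i w_i * tanh(a_i) w.r.t. the activations."""
    return [w * (1.0 - math.tanh(a) ** 2) for a, w in zip(acts, weights)]


def tcav_score(class_acts: Sequence[Vector], weights: Vector, cav: Vector) -> float:
    """Fraction of class examples whose logit increases when moving along the CAV."""
    positive = 0
    for acts in class_acts:
        grad = toy_logit_gradient(acts, weights)
        directional_derivative = sum(g * v for g, v in zip(grad, cav))
        if directional_derivative > 0:
            positive += 1
    return positive / len(class_acts)


# Hypothetical 2-D layer activations: the concept lives mostly along dimension 0.
cav = concept_activation_vector(
    concept_acts=[[2.0, 0.1], [1.8, -0.1], [2.2, 0.0]],
    random_acts=[[0.0, 0.2], [0.1, -0.2], [-0.1, 0.0]],
)
class_acts = [[0.5, 0.3], [1.0, -0.2], [0.2, 0.8]]
print(tcav_score(class_acts, weights=[1.0, -0.5], cav=cav))  # 1.0
```

A score near 1 suggests the concept pushes the model toward the class; if that concept is a sensitive attribute the model should ignore, the score is a bias warning sign.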

2. Microsoft's AI Principles

Microsoft has an AI ethics committee and supports tools such as Fairlearn, an open-source toolkit for assessing and mitigating unfairness in AI systems.
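Fairlearn provides its own mitigation algorithms; the sketch below instead illustrates a different, simpler pre-processing technique, reweighing (the approach behind AI Fairness 360's `Reweighing`), to show what "offsetting" unfairness can mean in practice. Each training example gets the weight P(group) × P(label) / P(group, label), which makes group and label independent in the weighted data. This is not Fairlearn's API; the function names and data are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def reweighing_weights(groups: Sequence[str], labels: Sequence[int]) -> List[float]:
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    Under these weights, group membership and label are statistically
    independent in the weighted training data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # (count_g / n) * (count_y / n) / (count_gy / n) simplifies to the expression below.
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(group: str, groups, labels, weights) -> float:
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical training data: group A has 3/4 positive labels, group B only 1/4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print(weights)
print(weighted_positive_rate("A", groups, labels, weights))
print(weighted_positive_rate("B", groups, labels, weights))
```

After reweighing, both groups have the same weighted positive rate, so a model trained with these sample weights no longer sees group membership as predictive of the label.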

3. IBM's AI Explainability 360 Toolkit

IBM offers AI Explainability 360, an open-source toolkit of explanation methods that helps businesses understand AI decisions and gain confidence in AI-driven insights.

The Future of Proactive AI

As AI systems become more autonomous, proactive development will be essential for:

- Autonomous Vehicles – Ensuring safe and ethical decision-making.

- Healthcare AI – Avoiding misdiagnoses and biased treatment suggestions.

- Generative AI – Reducing misinformation and deepfake threats.